A feminist perspective on data science:

The Feminist Search Tool

by Aggeliki Diakrousi, January 2021

This essay is a reflection on the development of a feminist search tool and my involvement in its technical and conceptual aspects. It refers to the notion of feminist critical computing in public infrastructures and specifically digital libraries. It brings to light the frictions between the technical aspects of a digital infrastructure and the societal issues it comes to address. This essay studies one1 of the prototypes of the Feminist Search Tool (FST), a collective artistic project that explores ways of engaging with items of digital library catalogues and systems of categorization2. The specific FST I worked on is a digital visualization tool that engages with the collection of the International Homo/Lesbian Information center and Archive (IHLIA) using terms from their Homosaurus, a standalone international LGBTQ linked data vocabulary that is used to describe their collection3. This essay highlights the tensions between technical restrictions and research questions within the working group of the FST.

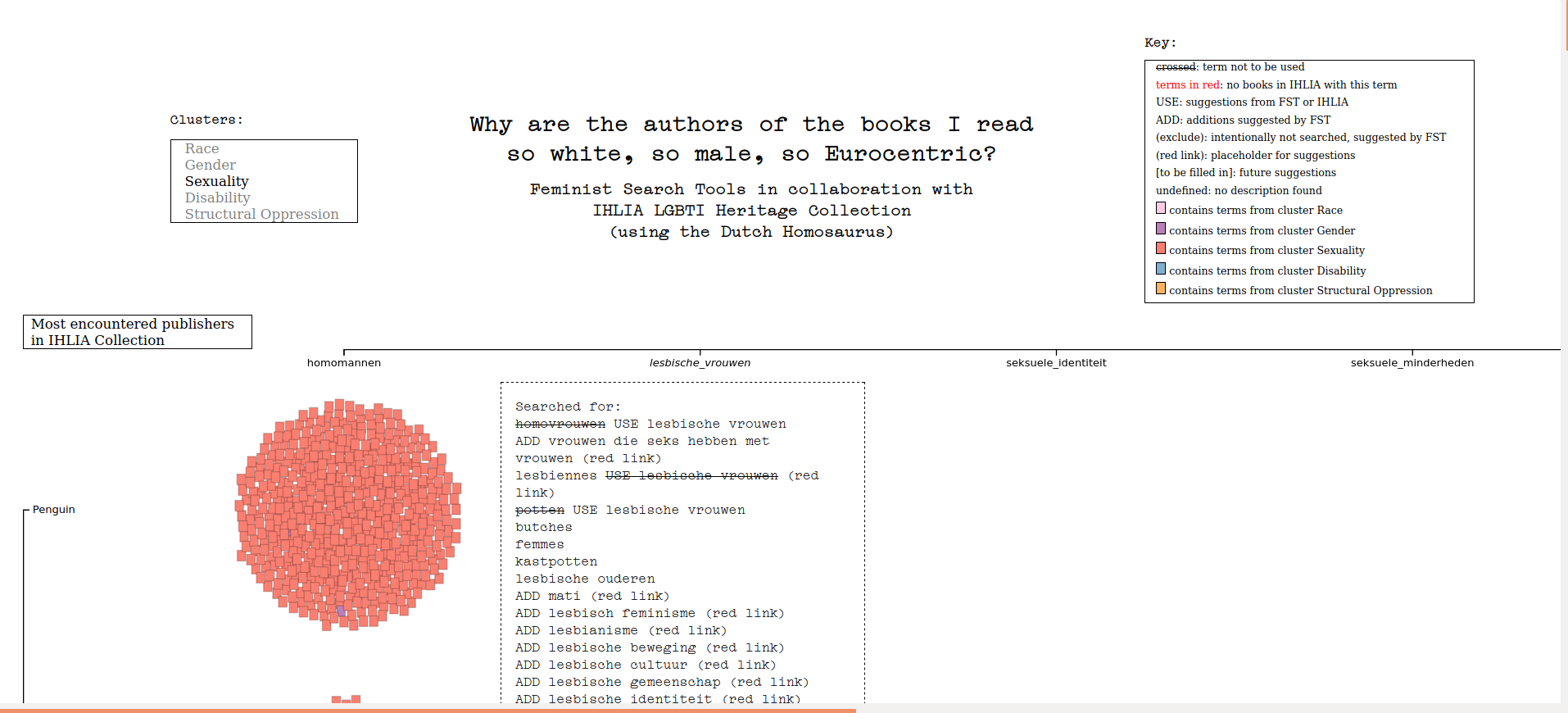

One such question initiated the FST group's collective research: “Why are the authors of the books I read so white, so male, so Eurocentric?", implying an absence of diversity due to missing data in libraries. A search tool is a way to bring awareness to these gaps and inclusion and exclusion mechanisms in Western knowledge collection and distribution systems. The project challenges categorization protocols in libraries and the question of who holds the power and responsibility for determining the search and retrieval process: the readers, the users or the librarians? The algorithms, or the libraries? And in the end, "what do we change, delete and keep, the users or the researchers and the library or the algorithm? Can we become aware of the search engine’s short comings and make them experiential?" (Read-in, 2017). Institutional cataloguing systems have a desire to unify and universalise the collection, which often omits authors from different backgrounds, passes biases and ignores non-Western systems of knowledge. It is often impossible to intervene in the categories from the position of an outsider to the institution. A feminist perspective on data science is critical regarding the missing datasets in such systems. Who is collecting, and what, are both questions that feminist researchers are asking. The field descriptors in the dataset of the books of IHLIA's collection came from the Dutch Homosaurus. This field, that was filled in with the objective choice of the librarians, would mostly refer to the content of the books. The interface of the FST, with five categories to search within ('Race', 'Gender', 'Sexuality', 'Disability', 'Structural Oppression') lists publishers by name vertically and horizontally displays clusters of books labelled with the same term from the Homosaurus. Metadata is visible when hovering over a box that represents each book. The aim of this offline prototype (developed with d3.js) is to visualize the collection's intersectionality, according to the interests of the librarians/researchers in the FST group.

Visualizing data of the collection would allow library users to highlight and question aspects such as diversity of gender and publishers in the catalogue, or the lack thereof. Data visualizations, like all graphs and charts, appear as objective 'proofs' and facts. But these representations are far from neutral. Presentations of data reflect the subjective view of the technical designer, the companies, the political actors, the institutions. The graphic style, the organisational structure and language used to describe them indicate what is the intention, who is privileged and also who is discriminated against. These visualization interfaces display more than fixed information. They are also communication tools, a point that so-called technical masters of data are often unable to take into account. D’Ignazio and Klein (2020, pg. 59) observe that obscure and blurry politics and goals lie behind the technology in the hands of those in power. The people who are the subjects of these graphs are usually not invited to participate in classification processes. Visualization tools remain black-boxed algorithms, indicating problems without offering solutions. The data are often "presented with standard methods [...] and conventional positionings" (D’Ignazio and Klein, 2020, pg. 78). In the case of the FST, we attempted a critical intervention to the x-axis by complexifying it.

In an early prototype of the tool, one x-axis included three different genders to describe the authors (female, male, other). After long discussions we attempted to break with orthodox gender divisions. As it was difficult to automatically pinpoint data on the gender of the authors, we decided to use the descriptor fields from the Homosaurus that describe intersectional aspects of each books' content. Our experiments in 'complexifying' the x-axis included adding context around the terms: potential, suggested, missing (red links) — proposals for new categories that indicate gaps in the library — and related or updated terms. Complexifying also allowed space for uncertainties to exist. Each category has a colour. If a book has a term from another category then it takes the colour of that particular one. In that way we see if its content relates to both race and gender, for example, and so if it addresses more intersectional topics than its counterparts in the same group. While designing the FST tool, long conversations were filled with philosophical, difficult and unsolvable concerns and doubts, transcending our vision of data science beyond the fixed boundaries of categories. Libraries we access are bound by categories that are not flexible nor intersectional, and as a result restrict the potential for new understandings of the books to develop. In the technical development of the project, fellow FST group member Alice Strete and I sought to implement these uncertainties in the software and the interface. How to visualize and communicate uncertainty with an algorithm that can be so certain and fixed? How to allow for human additions, errors and questions? In the same way we transfer societal issues and unjust practices to an algorithm, we can also transfer non-fixed perspectives — gender binary, for example, is a way we have learned to categorize people, often through representation in graphs.

In our conversations for the development and improvement of the prototype we considered context. We had a situated approach that embraced the specific context we are in and what part of the collection reflects the questions we addressed in our discussions. Thus we chose specific terms, categorized them and addressed specific issues. Haraway (1988) has developed arguments around a feminist objectivity which is embodied and depends on the position and situation of the individual. This partial perspective is non-illusionary and provides space for interaction with others’ visions and thus the development of a universal knowledge from the bottom-up. "Data visualization and all forms of knowledge that are situated are produced by specific people in specific circumstances—cultural, historical, and geographic" (D’Ignazio and Klein, 2020, pg. 83). We also added context — visible when hovering over each term on the x-axis — related to terms that we would like to update because they are no longer socially acceptable, interventions from the group that are not necessarily connected to the Homosaurus and definitions that expand the meanings and the potentialities of the term.

During the process of developing this tool there were many tensions between our conceptual visions and the algorithm. In order to bridge this gap there were many steps back and forth between what we desired and the technical restrictions the algorithm presented. Our imaginations ran faster than our technical capabilities, but also operating within the constraints of how an algorithm is structured requires significant time and a step-by-step approach. The building of the algorithm had another series of discussions, solving issues and fixing errors, thus it had a slow rhythm that we allowed to exist. Within a culture based around debugging, often problems with tools are addressed in ways that allow only fast fixes. In a counter approach to this techno-solutionist drive, we wanted to explore other paths that allow an openness and dialogue between technicians, algorithms and librarians with all their slowness, difficulties and amateur knowledge. This may result in a tool that is less 'clean' and more chaotic than what is developed within the professional tech industry but it visualizes a situated moment of an ongoing process intervening in a particular dataset. The FST gave me an insight into how software practices influence society through the way public collections are categorised. In my research I use different feminist pedagogies and various methodologies to engage many people in the technical aspects of a tool and this approach is also used by the FST. These people included those that are related to the tool but don't have the knowledge of how it functions and are newcomers or technically non-experts, such as amateur librarians, readers, researchers and authors. D’Ignazio and Klein (2020, pg. 58) find community engagement important to move on from an endless loop of just highlighting the problem through data visualization, to an era of data justice and change. FST makes documentation of the software public and organises workshops and reflection meetings to map and open up exchanges of technical knowledge and experiences. In this way, power is shared through experiential ways of knowing.

Data science is commonly perceived as an abstract and technical realm. Discussions on social context, ethics and politics of data and communities are less involved and data science seems like a mastery difficult to grasp. Thus only big tech industry operators, institutions and political actors can make the decisions for infrastructural development. My experience working between technical and cultural concerns has showed me that a multidisciplinary approach can be constructive and democratic. Educational and artistic tools bridge different fields and communities of people, those who are included and excluded within the datasets maintained by western society. Intersectional and feminist approaches to data infrastructures, allow the conflicts and uncertainties that open more paths for a hybrid technology.

academic references

-

D’Ignazio, C. and Klein, L. F. (2020) Data Feminism. Cambridge, Massachusetts: The MIT Press.

-

Haraway, D. (1988) ‘Situated Knowledges: The Science Question in Feminism and the Privilege of Partial Perspective’, Feminist Studies, 14(3), pp. 575–599. doi: 10.2307/3178066.

-

Read-in, 2017, Feminist Search Tool, viewed 23 December 2020, <https://read-in.info/feministsearchtool/>

footnotes

-

This prototype was developed between May-November 2020 and was supported by the Creative Industries Funds. ↩

-

The project is part of a long-term collaboration between the two collectives Read-in and Hackers \& Designers. The Feminist Search Tools work group consists of: Read-in (Annette Krauss, Svenja Engels, Laura Pardo), Hackers \& Designers, (Anja Groten, André Fincato, Heerko van der Kooij, and and previous member James Bryan Graves), Ola Hassanain, Angeliki Diakrousi and Alice Strete. More information about it here: https://feministsearchtool.nl/ and https://fst.swummoq.net/ ↩

-

More about IHLIA: https://www.ihlia.nl/ and Homosaurus: https://homosaurus.org/ ↩